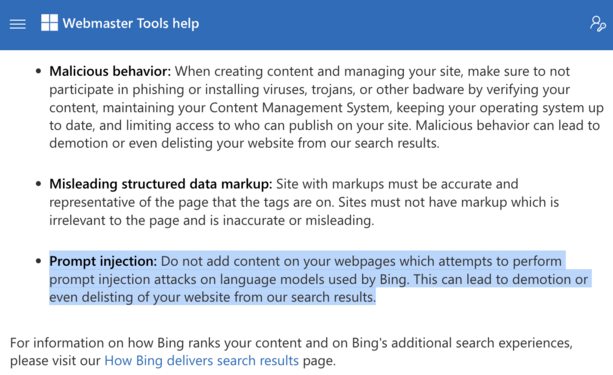

"In the nascent field of AI hacking, indirect prompt injection has become a basic building block for inducing chatbots to exfiltrate sensitive data or perform other malicious actions. Developers of platforms such as Google's Gemini and OpenAI's ChatGPT are generally good at plugging these security holes, but hackers keep finding new ways to poke through them again and again.

On Monday, researcher Johann Rehberger demonstrated a new way to override prompt injection defenses Google developers have built into Gemini—specifically, defenses that restrict the invocation of Google Workspace or other sensitive tools when processing untrusted data, such as incoming emails or shared documents. The result of Rehberger’s attack is the permanent planting of long-term memories that will be present in all future sessions, opening the potential for the chatbot to act on false information or instructions in perpetuity."

https://arstechnica.com/security/2025/02/new-hack-uses-prompt-injection-to-corrupt-geminis-long-term-memory/